What is Deep Learning?

Deep learning is a subset of machine learning based on neural networks with more than two layers.

With varying degrees of success, these neural networks attempt to imitate the way the human brain works, allowing them to “learn” from vast amounts of data.

While a single-layer neural network can still produce approximate predictions, additional hidden layers can help optimise and refine it for accuracy.

Deep learning is transforming industries, revolutionising technologies, and unlocking previously unimaginable possibilities.

In this blog article, we explore deep learning to understand its foundations, its applications, and the significant impact it continues to have on the future of AI.

Andrew Ng, a renowned figure in the field of deep learning and the founder of DeepLearning.AI, depicted the essence of deep learning with a cartoon in one of his videos:

Deep Learning vs. Machine Learning

Are deep learning and machine learning the same?

Let’s answer that by listing the differences between the two:

| DEEP LEARNING | MACHINE LEARNING | |

| Definition | A machine learning subfield that focuses on multi-layered neural networks. (deep neural networks). It tries to imitate the architecture of the human brain so that machines may learn and make decisions without explicit programming. | Machine learning is a more extensive term that comprises algorithms and statistical models to enable computers to execute tasks without explicit programming. It entails creating models that can learn patterns from data and make predictions or judgments. |

| Architectural Difference | Deep learning is supported by artificial neural networks, specifically deep neural networks with several layers (deep layers). These networks learn hierarchical data representations automatically, avoiding the need for considerable manual feature engineering. | Algorithms in classical machine learning are designed to process data, extract features, and discover patterns in order to generate predictions or judgments. Feature engineering is an essential stage in traditional machine learning. |

| Feature Representation | From raw data, DL algorithms automatically learn hierarchical feature representations. The numerous layers of deep neural networks enable the model to find sophisticated patterns and characteristics on its own. | ML frequently necessitates the human extraction and selection of relevant characteristics from input data. Feature engineering is critical to ensuring that the model can learn appropriately from the data. |

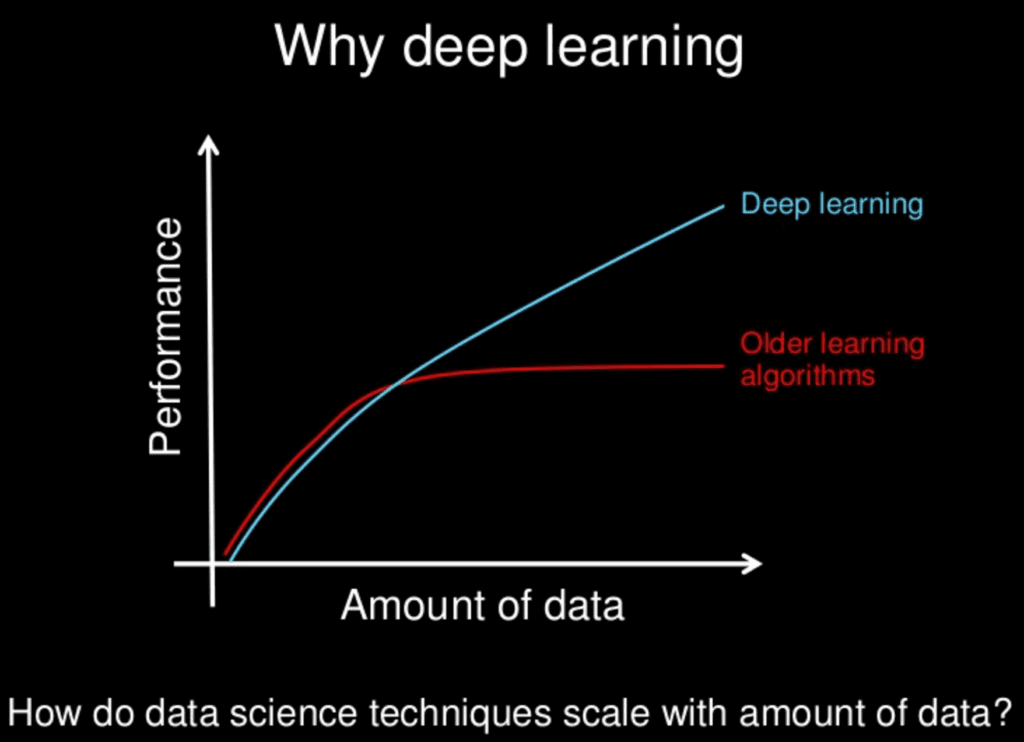

| Data Requirements | Deep learning methods, particularly deep neural networks, often mostly need a huge quantity of tagged data to learn complicated patterns and representations effectively. A large amount of data is required to adequately train deep models. | Depending on the task’s complexity, ML algorithms can deal with fewer datasets and may perform effectively with minimal amounts of labelled data. |

| Use- Cases | DL excels at tasks involving high-dimensional and complicated data, such as picture and speech recognition, natural language processing, and strategic gameplay. It has achieved notable success in computer vision and speech synthesis applications. | ML is used in a variety of fields, including linear regression, decision trees, support vector machines, and clustering methods. It is appropriate for a variety of applications, including classification, regression, and clustering. |

An example comparing the machine learning approach with the deep learning approach:

How Deep Learning Works

Deep learning neural networks, also known as artificial neural networks, attempt to replicate the functions of the human brain through a combination of data inputs, weights, and biases.

These components collaborate to recognise, classify, and characterise objects in data effectively.

Deep neural networks consist of multiple layers of interconnected nodes, each of which builds on the previous layer to refine and optimise the prediction or classification.

This progression of computations through the network is called forward propagation. The input and output layers of a deep neural network are called visible layers.

The input layer is where the deep learning model receives the data for processing, and the output layer is where the final prediction or classification is made.
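To make forward propagation concrete, here is a minimal sketch in plain Python, assuming no deep learning framework. The 2-3-1 network, its weights, and the helper names `dense` and `forward` are hypothetical, chosen only for illustration: each layer multiplies its inputs by weights, adds a bias, and applies an activation function, and the output of one layer becomes the input of the next.

```python
def relu(z: float) -> float:
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)


def identity(z: float) -> float:
    """No activation; used for the output node of this regression-style example."""
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Forward propagation: feed the input through each layer in turn."""
    activations = x
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations


# Hypothetical 2-3-1 network: 2 inputs, one hidden layer of 3 nodes, 1 output node.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0], relu),  # hidden layer
    ([[1.0, 1.0, 1.0]], [0.5], identity),                             # output layer
]

print(forward([2.0, 1.0], layers))  # [3.0]
```

Here the hidden layer produces `[1.0, 1.5, 0.0]` (the third node’s negative sum is zeroed by ReLU), and the output node adds these up plus its bias of 0.5.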

Another process, called backpropagation, works in the opposite direction. It measures the error in the model’s predictions, then moves backwards through the layers to calculate how much each weight and bias contributed to that error. An optimisation algorithm such as gradient descent then uses these results to adjust the weights and biases.

This is how the model is trained. Together, forward propagation and backpropagation allow a neural network to make predictions and correct its errors, so the model gradually becomes more accurate over time.
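The training loop described above can be sketched on a deliberately tiny network, `y_hat = w2 * tanh(w1 * x + b1) + b2`, in plain Python. Everything here (the network shape, the toy data, the learning rate `lr`, and the function names) is an illustrative assumption; real frameworks compute these gradients automatically and operate on tensors rather than scalars. `loss_and_grads` is the backpropagation step, applying the chain rule from the output back to each weight and bias, and `train` is plain gradient descent, moving each parameter a small step against its gradient.

```python
import math


def forward(params, x):
    """Forward pass of a hypothetical 1-1-1 network: y_hat = w2 * tanh(w1 * x + b1) + b2."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)  # hidden-node activation
    return h, w2 * h + b2


def loss_and_grads(params, data):
    """Mean squared error and its gradients, computed by backpropagation (chain rule)."""
    w1, b1, w2, b2 = params
    n = len(data)
    loss = 0.0
    grads = [0.0, 0.0, 0.0, 0.0]  # dL/dw1, dL/db1, dL/dw2, dL/db2
    for x, y in data:
        h, y_hat = forward(params, x)
        err = y_hat - y
        loss += err ** 2 / n
        d_yhat = 2 * err / n       # dL/dy_hat
        grads[2] += d_yhat * h     # output-layer weight
        grads[3] += d_yhat         # output-layer bias
        d_h = d_yhat * w2          # propagate the error back to the hidden node
        d_z = d_h * (1 - h ** 2)   # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
        grads[0] += d_z * x        # hidden-layer weight
        grads[1] += d_z            # hidden-layer bias
    return loss, grads


def train(params, data, lr=0.1, steps=200):
    """Gradient descent: repeatedly step every parameter against its gradient."""
    history = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, data)
        history.append(loss)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, history


# Hypothetical toy data: nine points sampled from y = 2 * tanh(x) on [-2, 2].
data = [(x / 2, 2 * math.tanh(x / 2)) for x in range(-4, 5)]
params, history = train([0.5, 0.0, 0.5, 0.0], data)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

Comparing the first and last loss values shows the error shrinking as training proceeds. When backpropagation is written by hand like this, a finite-difference check (nudging one parameter slightly and measuring how the loss changes) is a common way to confirm the gradients are correct.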

In short, this describes the simplest type of deep neural network.

However, deep learning algorithms can be far more sophisticated, and many types of neural networks exist to address specific problems or datasets. For example:

- Convolutional neural networks (CNNs) are widely used in computer vision (CV) and image classification applications. CNNs can identify features and patterns in an image, enabling them to perform tasks like object detection and recognition.

- Recurrent neural networks (RNNs) are commonly used in natural language processing and speech recognition applications because they work with sequential or time-series data.
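The core operation of each network type can be sketched in a few lines of plain Python. These are simplified, hypothetical helpers (`conv2d`, `rnn`), not any framework’s API. `conv2d` slides a small kernel over an image and computes a weighted sum at each position (like most deep learning libraries, it actually computes cross-correlation, without flipping the kernel). `rnn` feeds each element of a sequence through the same weights together with a hidden state that carries information forward from earlier steps.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (strictly, cross-correlation:
    the kernel is not flipped). Each output value is the weighted sum of one patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def rnn(sequence, w_x, w_h, b):
    """Scalar Elman-style RNN: the hidden state h carries information between steps."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # same weights reused at every step
        states.append(h)
    return states


# A hypothetical 4x4 image with a vertical edge between columns 1 and 2, and a
# hand-picked vertical-edge kernel (a real CNN learns its kernels from data).
image = [[0, 0, 1, 1]] * 4
kernel = [[-1, 1], [-1, 1]]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]

# A short hypothetical sequence: a single non-zero input followed by zeros.
print(rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

The convolution responds only where the edge is. In the RNN output, the second and third states are still non-zero even though their inputs are zero, because the hidden state remembers the first input; that memory fades at each step here because `w_h` is less than 1.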

Top Open-Source Deep Learning Tools

1. TensorFlow: TensorFlow is one of the most widely used frameworks for natural language processing, text classification and summarisation, speech recognition and translation, and other tasks. It is highly flexible and includes many tools and libraries for building and deploying machine learning models.

2. Microsoft Cognitive Toolkit (CNTK): CNTK is well suited to image, speech, and text data and supports both CNNs and RNNs. Users can work in high-level languages to express complex computations, and its fine-grained building blocks help these run smoothly.

3. Caffe: Caffe is a deep learning framework designed with speed, modularity, and expressiveness in mind. It can be used from C++, Python, and MATLAB and is particularly useful for convolutional neural networks.

4. Chainer: Chainer is a Python-based deep learning framework that offers automatic differentiation APIs based on the define-by-run approach (also known as dynamic computational graphs). It also provides high-level object-oriented APIs for designing and training neural networks.

5. Keras: Keras is a popular choice because it supports both CNNs and RNNs. It is written in Python and can run on top of TensorFlow, CNTK, or Theano. It allows for rapid experimentation, helping users go from idea to result quickly. TensorFlow is Keras’s default backend.

6. Deeplearning4j: Deeplearning4j is a JVM-based, industry-focused, commercially supported, distributed deep learning framework and another popular choice. Its primary benefit is speed: it can process large volumes of data quickly.
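Chainer’s define-by-run approach means the computational graph is recorded while the forward computation actually runs, rather than being declared up front. The toy reverse-mode automatic differentiation below illustrates the idea in plain Python. The `Var` class and the `model` function are hypothetical and are not Chainer’s (or any other framework’s) API.

```python
import math


class Var:
    """A scalar that records, as it is computed, which inputs produced it and the
    local derivative with respect to each one (define-by-run)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuple of (parent Var, local gradient) pairs
        self.grad = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def tanh(self):
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self):
        """Reverse-mode autodiff: visit the recorded graph in reverse topological
        order and accumulate gradients with the chain rule."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)  # parents are appended before their children

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += node.grad * local


def model(x, w, b):
    """Ordinary Python control flow decides the graph's shape on each call."""
    z = x * w + b
    return z.tanh() if z.value > 0 else z * 0.1


x, w, b = Var(2.0), Var(0.5), Var(-0.25)
y = model(x, w, b)
y.backward()
print(y.value, w.grad, b.grad)
```

Because `model` is plain Python, the `if` statement decides which operations are recorded on each call; with these inputs `z` is positive, so the graph goes through `tanh`. This flexibility is the main attraction of dynamic computational graphs.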

Applications across Industries

Speech and image recognition

Deep learning’s ability to recognise images and sounds is one of its most renowned achievements. Convolutional neural networks (CNNs) and related techniques have enabled machines to “see” and interpret visual input with remarkable accuracy. Speech recognition using recurrent neural networks (RNNs) has transformed human-machine interaction, advancing to the point where machines can understand and respond to spoken words.

Natural Language Processing (NLP):

Deep learning has reshaped language understanding and generation in the field of NLP. OpenAI’s GPT-3 (Generative Pre-trained Transformer 3) model, for example, can generate human-like text, translate languages, and even compose poetry. These developments have far-reaching consequences for content generation, translation services, and chatbot interactions.

Innovations in Healthcare:

Deep learning has produced tremendous advances in healthcare, from diagnostic image analysis to forecasting patient outcomes. Algorithms can evaluate medical images, detect abnormalities, and help clinicians make better-informed decisions, making early disease detection and personalised medicine more achievable than ever.

Autonomous Vehicles:

Deep learning is a driving force behind the development of autonomous vehicles in the automotive sector. Neural networks interpret real-time input from sensors, cameras, and lidar, allowing vehicles to understand their surroundings, make decisions, and navigate safely. This is clear evidence of deep learning’s transformational power in complex, dynamic situations.

Challenges in Deep Learning

Although deep learning has made great strides in many areas, there are still issues that need to be resolved. The following are a few of the primary obstacles in deep learning.

- Data availability: Deep learning models learn from vast volumes of data, so acquiring as much high-quality training data as possible is crucial for deep learning applications.

- Computational resources: Training deep learning models typically requires specialised hardware, such as GPUs and TPUs, which makes it computationally expensive.

- Time consumption: Depending on the computational resources available, training, especially on sequential data, can take days or even months.

- Interpretability: The inner workings of deep learning models are intricate and largely opaque, which makes their outputs difficult to understand.

- Overfitting: With repeated training, a model can become overly specialised to the training set and then perform poorly on new data.
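Overfitting is easiest to see on a toy problem outside deep learning. The sketch below uses polynomial curve fitting on hypothetical data as a stand-in for an over-flexible model: a degree-5 polynomial (`lagrange_fit`) passes exactly through all six noisy training points, while a straight line (`linear_fit`) cannot. The function names, the data, and the choice of models are illustrative assumptions, not a deep learning method.

```python
def lagrange_fit(xs, ys):
    """Degree-(n-1) polynomial passing exactly through all n training points."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)  # Lagrange basis factor
            total += term
        return total
    return predict


def linear_fit(xs, ys):
    """Ordinary least-squares straight line: a much simpler model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def mse(model, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: the true relationship is y = 2x, plus small alternating noise.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [2 * x + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(train_x)]
test_x = [6.0, 7.0]  # new, unseen inputs
test_y = [2 * x for x in test_x]

for name, fit in [("polynomial", lagrange_fit), ("linear", linear_fit)]:
    model = fit(train_x, train_y)
    print(f"{name}: train MSE {mse(model, train_x, train_y):.3f}, "
          f"new-data MSE {mse(model, test_x, test_y):.3f}")
```

The polynomial reaches zero training error by fitting the noise along with the underlying trend, and its predictions for the two new points are far worse than the straight line’s. Deep networks with many parameters can fail in the same way, which is why they are evaluated on data held out from training.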

Conclusion

Deep learning continues to evolve as we stand on the verge of an AI-powered future. Ongoing research aims to improve model accuracy, address ethical concerns, and improve interpretability.

Deep learning promises technological breakthroughs and the restructuring of businesses, redefining what is feasible in artificial intelligence.

To summarise, deep learning is an enthralling chapter in the tale of AI, where technology meets ingenuity, and the possibilities are endless.

As we uncover the mysteries of neural networks and experience their revolutionary influence, the future of deep learning promises a world in which machines understand, learn, and adapt, pushing the frontiers of what we believe is possible in artificial intelligence.