What is Microsoft Cognitive Toolkit ?

Microsoft Cognitive Toolkit (CNTK), originally known as Computational Network Toolkit, is a free, user-friendly, open-source, commercial-grade toolkit that allows us to train deep learning algorithms to learn like the human brain. It enables us to build well-known deep learning systems such as feed-forward neural network time series prediction systems and convolutional neural network (CNN) image classifiers.

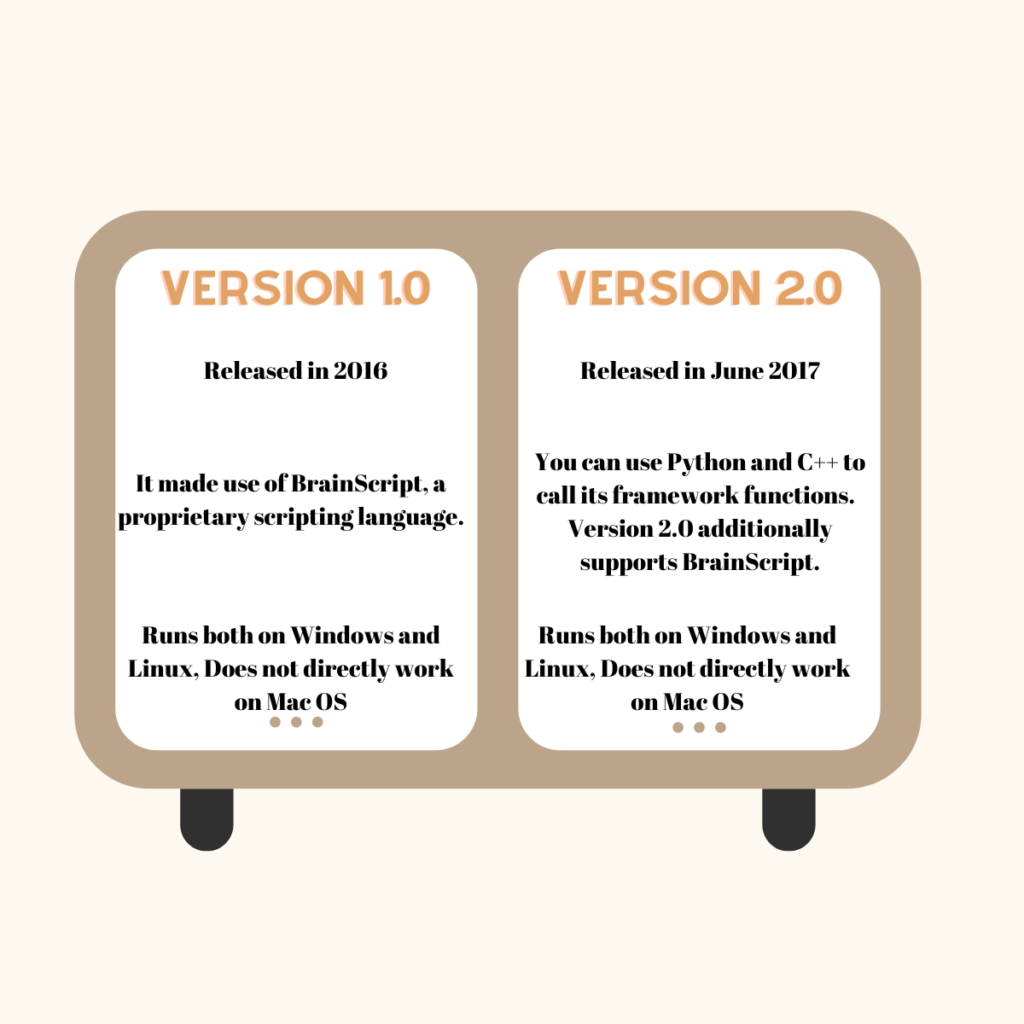

Comparison of 1.0 and 2.0 version of Microsoft Cognitive Toolkit

Features

- CNTK includes highly optimized built-in components capable of handling multidimensional dense or sparse data from Python, C++, or BrainScript.

- We can use CNN, FNN, RNN, Batch Normalization, and Sequence-to-Sequence with care.

- It allows us to add additional user-defined core components on the GPU using Python.

- It also supports automatic hyperparameter adjustment.

- We can use reinforcement learning, generative adversarial networks (GANs), supervised and unsupervised learning.

- For large datasets, CNTK has optimised readers.

Advantages of Microsoft Cognitive Toolkit

- With cutting-edge accuracy, deep learning models can be trained using it.

- It features a very robust C++ API as well as high-level, user-friendly Python APIs that are both low-level and functional programming paradigm-driven.

- Scaling it across thousands of GPUs is not difficult.

- Its support for C#,.NET, and Java inference makes it simple to include CNTK evaluation into user programs.

- It’s simple to expand from Python for learners and layers.

- Built-in readers. Effective built-in data readers in CNTK facilitate distributed learning as well.

- Since Microsoft’s internal product groups use the same toolbox, you wouldn’t be compromised in any way.

Tutorial for working with Microsoft Cognitive Toolkit:

Architecture of Microsoft Cognitive Toolkit

Microsoft Cognitive Toolkit – Sequence Classification

Tensors

Tensor is the idea that CNTK operates on. CNTK inputs, outputs, and parameters are essentially arranged as tensors, which are frequently compared to generalized matrices. Each tensor possesses a rank −

- Tensor of rank 0 is a scalar.

- Tensor of rank 1 is a vector.

- Tensor of rank 2 is a matrix.

Static and Dynamic Axes

The static axis, as their name suggests, remain the same length for the duration of the network. However, dynamic axes might differ in length from instance to instance. Actually, each minibatch is usually provided without their length being known beforehand.

Because they also define a meaningful grouping of the tensor’s integers, dynamic axes are similar to static axes.

Using sequences in the Microsoft Cognitive Toolkit

Long-Short Term Memory Network (LSTM)

Hochreiter & Schmidhuber introduced long-short term memory (LSTM) networks. It resolved the issue of achieving long-term memory retention in a basic recurrent layer. The diagram above shows the LSTM architecture. Its input neurons, memory cells, and output neurons are all visible. Long-short term memory networks use an explicit memory cell (which stores the previous values) and the following gates to counteract the vanishing gradient problem. −

- Forget gate: It instructs the memory cell to erase the prior values, as the name suggests. Until the gate, also known as the “forget gate,” instructs it to forget the values, the memory cell keeps them.

- Input gate: As the name suggests, an input gate adds new elements to the cell.

- Output Gate: The output gate determines when to forward the vectors from the cell to the subsequent hidden state, as its name suggests.

With CNTK, working with sequences is quite simple. With the help of the following example, let’s examine it:

import sys

import os

from cntk import Trainer, Axis

from cntk.io import MinibatchSource, CTFDeserializer, StreamDef, StreamDefs,\

INFINITELY_REPEAT

from cntk.learners import sgd, learning_parameter_schedule_per_sample

from cntk import input_variable, cross_entropy_with_softmax, \

classification_error, sequence

from cntk.logging import ProgressPrinter

from cntk.layers import Sequential, Embedding, Recurrence, LSTM, Dense

def create_reader(path, is_training, input_dim, label_dim):

return MinibatchSource(CTFDeserializer(path, StreamDefs(

features=StreamDef(field='x', shape=input_dim, is_sparse=True),

labels=StreamDef(field='y', shape=label_dim, is_sparse=False)

)), randomize=is_training,

max_sweeps=INFINITELY_REPEAT if is_training else 1)

def LSTM_sequence_classifier_net(input, num_output_classes, embedding_dim,

LSTM_dim, cell_dim):

lstm_classifier = Sequential([Embedding(embedding_dim),

Recurrence(LSTM(LSTM_dim, cell_dim)),

sequence.last,

Dense(num_output_classes)])

return lstm_classifier(input)

def train_sequence_classifier():

input_dim = 2000

cell_dim = 25

hidden_dim = 25

embedding_dim = 50

num_output_classes = 5

features = sequence.input_variable(shape=input_dim, is_sparse=True)

label = input_variable(num_output_classes)

classifier_output = LSTM_sequence_classifier_net(

features, num_output_classes, embedding_dim, hidden_dim, cell_dim)

ce = cross_entropy_with_softmax(classifier_output, label)

pe = classification_error(classifier_output, label)

rel_path = ("../../../Tests/EndToEndTests/Text/" +

"SequenceClassification/Data/Train.ctf")

path = os.path.join(os.path.dirname(os.path.abspath(__file__)), rel_path)

reader = create_reader(path, True, input_dim, num_output_classes)

input_map = {

features: reader.streams.features,

label: reader.streams.labels

}

lr_per_sample = learning_parameter_schedule_per_sample(0.0005)

progress_printer = ProgressPrinter(0)

trainer = Trainer(classifier_output, (ce, pe),

sgd(classifier_output.parameters, lr=lr_per_sample),progress_printer)

minibatch_size = 200

for i in range(255):

mb = reader.next_minibatch(minibatch_size, input_map=input_map)

trainer.train_minibatch(mb)

evaluation_average = float(trainer.previous_minibatch_evaluation_average)

loss_average = float(trainer.previous_minibatch_loss_average)

return evaluation_average, loss_average

if __name__ == '__main__':

error, _ = train_sequence_classifier()

print(" error: %f" % error)Output:

| average since average since examples loss last metric last —————————————————— 1.61 1.61 0.886 0.886 44 1.61 1.6 0.714 0.629 133 1.6 1.59 0.56 0.448 316 1.57 1.55 0.479 0.41 682 1.53 1.5 0.464 0.449 1379 1.46 1.4 0.453 0.441 2813 1.37 1.28 0.45 0.447 5679 1.3 1.23 0.448 0.447 11365 error: 0.333333 |